Statistical Mathematics for dimensionality reduction in data science.

What I will discuss:

- What is feature engineering and dimensionality reduction

- Variance and Central Tendency (Mean)

- Covariance

- How can you use this in Machine Learning

- Conclusion

Feature Engineering and dimensionality reduction

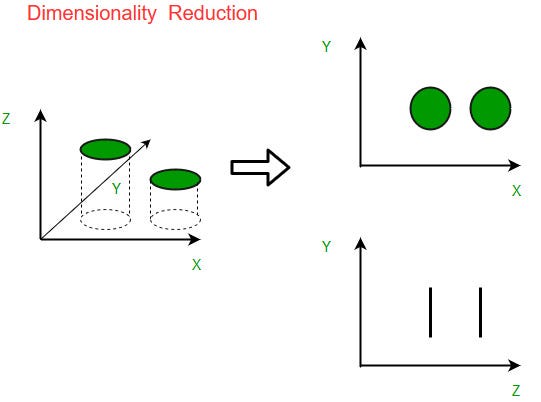

Feature engineering is probably one of the most crucial steps to take before feeding a matrix of input features into a machine learning model. It aims to uncover the relationships between, and the properties of, the different random variables (features) in a dataset. A random variable is simply a quantity whose value depends on the outcome of a random experiment, such as the number shown when throwing a die. Understanding these relationships helps us remove the features that do not contribute to the target variable. Reducing the number of features in this way is called dimensionality reduction. It is a must-have skill for data science and machine learning, and it can considerably reduce the time and space complexity of algorithms.

Dimensionality reduction techniques are categorized as supervised or unsupervised. I will focus on a supervised technique here. Unsupervised techniques include algorithms such as Principal Component Analysis (PCA) and Singular Value Decomposition (SVD), which I will discuss in an upcoming blog.

Variance and Central tendencies

A central tendency is a typical value of a probability distribution, one that attempts to describe the data with a single number. The common measures are the mean, median and mode. The most common of these is the familiar mean. The mean, or average, is the sum of all values in the data divided by the number of data points.
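To make the three measures concrete, here is a minimal sketch using Python's standard `statistics` module. The list `purchases` and its values are made up purely for illustration.

```python
from statistics import mean, median, mode

# Hypothetical sample: items bought by nine customers (made-up values).
purchases = [2, 3, 3, 3, 4, 5, 6, 8, 11]

avg = mean(purchases)    # sum of all values divided by the number of values
mid = median(purchases)  # middle value once the data is sorted
most = mode(purchases)   # most frequently occurring value

print("mean:", avg, "median:", mid, "mode:", most)
```

The three numbers differ here because a few large values pull the mean upward, while the median and mode stay close to where most of the data sits.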

One common data distribution is the Gaussian distribution (or normal distribution), which I take as an example here. Plotting its probability density against the data values gives a bell-shaped curve. The vertical line through the peak of the curve marks the mean.
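As a small sanity check of the bell shape, the sketch below evaluates a Gaussian density with `statistics.NormalDist` from the standard library. The mean of 170 and standard deviation of 10 are assumed values chosen only for the example.

```python
from statistics import NormalDist

# Hypothetical distribution, e.g. heights in cm (assumed parameters).
dist = NormalDist(mu=170.0, sigma=10.0)

density_at_mean = dist.pdf(170.0)  # height of the curve at the mean
density_left = dist.pdf(160.0)     # one standard deviation below the mean
density_right = dist.pdf(180.0)    # one standard deviation above the mean

print(density_at_mean, density_left, density_right)
```

The density is highest at the mean and equal at the same distance on either side of it, which is what gives the curve its symmetric bell shape.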

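Variance is simply the average of the squared differences from the mean. We square the differences so that negative ones do not cancel positive ones. In other words, variance tells us how far, on average, the data is spread out from the mean. The mathematical representation of the sample variance is

$$s^2 = \frac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar{x})^2$$

where $x_i$ are the data points, $\bar{x}$ is their mean and $n$ is the number of data points. Note that the sample variance divides by $n-1$ rather than $n$.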
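Here is a minimal sketch of the sample variance computed step by step and then compared against `statistics.variance`. The `data` list is a made-up example.

```python
from statistics import mean, variance

# Made-up data points for illustration.
data = [2, 4, 4, 4, 5, 5, 7, 9]

x_bar = mean(data)                                 # the mean of the data
squared_diffs = [(x - x_bar) ** 2 for x in data]   # squared distance of each point from the mean
sample_var = sum(squared_diffs) / (len(data) - 1)  # divide by n - 1 for the sample variance

print("by hand:", sample_var)
print("statistics.variance:", variance(data))
```

Both lines print the same value, since `statistics.variance` also uses the `n - 1` denominator.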

Covariance

Like variance, covariance measures how the data is spread, but it takes two variables into consideration. The formula stays the same, except that one of the two $(x_i - \bar{x})$ factors in the squared term is replaced by $(y_i - \bar{y})$, because here we have two random variables:

$$\mathrm{cov}(x, y) = \frac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})$$

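Notice that if $(x_i - \bar{x})$ is positive while $(y_i - \bar{y})$ is negative, where $\bar{x}$ and $\bar{y}$ are the means of the two variables, their product is negative. When most data points behave like this, the variables move in opposite directions and the covariance is negative. If both factors tend to have the same sign, one variable increases with the other and the covariance is positive. Finally, if the products cancel out so that the covariance is zero or close to it, one variable shows no consistent trend while the other increases or decreases. This implies no linear relationship between the two random variables.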
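The three cases can be sketched with a small hand-written helper. The function name `sample_covariance` and all the data below are hypothetical, chosen only to illustrate the signs.

```python
def sample_covariance(xs, ys):
    """Sample covariance of two equally long sequences (n - 1 denominator)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    return sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / (n - 1)

# Made-up data for illustration.
hours = [1, 2, 3, 4, 5]
score = [52, 55, 61, 64, 68]  # rises as hours rise
errors = [9, 7, 6, 4, 2]      # falls as hours rise
curve = [4, 1, 0, 1, 4]       # symmetric around the middle value of hours

cov_up = sample_covariance(hours, score)     # positive
cov_down = sample_covariance(hours, errors)  # negative
cov_none = sample_covariance(hours, curve)   # zero: the products cancel out

print(cov_up, cov_down, cov_none)
```

The last case is worth a second look: `curve` clearly depends on `hours`, yet the covariance is zero. A covariance of zero rules out a linear relationship, not every kind of relationship.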

NumPy, the Python library, also provides a covariance function, `numpy.cov`, which takes two random variables as arguments and returns their covariance matrix.
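A minimal sketch of calling `numpy.cov` on two variables follows. The arrays `x` and `y` are made-up data. Note that the result is a 2x2 matrix: the diagonal holds the variance of each variable and the off-diagonal entries hold the covariance between them.

```python
import numpy as np

# Made-up data for illustration.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([52.0, 55.0, 61.0, 64.0, 68.0])

cov_matrix = np.cov(x, y)  # 2x2 matrix; uses the n - 1 denominator by default

var_x = cov_matrix[0, 0]   # variance of x
var_y = cov_matrix[1, 1]   # variance of y
cov_xy = cov_matrix[0, 1]  # covariance of x and y (same as cov_matrix[1, 0])

print(cov_matrix)
```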

How to use this in Machine Learning

The covariance between the target variable and any other random variable (feature) can be computed with Python libraries such as NumPy, and the relationship can be inspected visually with Matplotlib. Features whose covariance with the target is very close to zero are candidates for removal, because they have no linear relationship with the target. Keep in mind that the size of a covariance depends on the units of the variables, so judge "close to zero" relative to the scale of the data. For example, when you are predicting whether a customer will buy some product or not, you can drop the customer ID. Note that two features that are linearly dependent on each other can also create problems, so it is good practice to drop one of them. For example, a distance recorded in both meters and feet is the same information twice, so we can drop one of the two columns.
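The two ideas above can be sketched on a toy dataset. Everything here is an assumption for illustration: the column names, the values, and both thresholds. For the second step I use the correlation coefficient from `numpy.corrcoef` (covariance scaled by the standard deviations) rather than raw covariance, because its value is exactly 1 or -1 for linearly dependent features regardless of units.

```python
import numpy as np

# Hypothetical toy dataset; all names and values are made up.
features = {
    "customer_id": np.array([1, 2, 3, 4, 5, 6, 7, 8], dtype=float),
    "visits": np.array([9, 2, 3, 8, 7, 1, 2, 9], dtype=float),
    "distance_m": np.array([100, 900, 800, 200, 150, 950, 700, 120], dtype=float),
}
features["distance_ft"] = features["distance_m"] * 3.28084  # same distance in feet
bought = np.array([1, 0, 0, 1, 1, 0, 0, 1], dtype=float)    # target variable

# Step 1: keep only features whose covariance with the target is not (near) zero.
cov_with_target = {
    name: np.cov(col, bought)[0, 1] for name, col in features.items()
}
kept = [name for name, c in cov_with_target.items() if abs(c) > 1e-9]

# Step 2: among the kept features, drop one of each linearly dependent pair.
selected = []
for name in kept:
    redundant = any(
        abs(np.corrcoef(features[name], features[other])[0, 1]) > 0.999
        for other in selected
    )
    if not redundant:
        selected.append(name)

print("covariance with target:", cov_with_target)
print("selected features:", selected)
```

In this toy data the customer ID is dropped in the first step and the distance in feet in the second. On real data the thresholds need care, since covariance grows with the scale of the feature.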

Conclusion

Dimensionality reduction simply means reducing the number of features by removing those that can create problems for our model. Doing so takes some statistical math, and it helps your model run efficiently. Other techniques for dimensionality reduction are yet to come.